Nowadays it seems that AI is being added to everything, from content creation platforms to mobile phones. I’ve even seen an AI-powered toaster which monitors the “toastie-ness” of the bread to guarantee “the perfect piece of toast every time”. It seems we’re only one step away from “Talkie Toaster” from Red Dwarf. Phrases like “Now with AI” are becoming the go-to marketing tag to indicate that your platform, service, or toaster is cutting edge.

Aside from the practical applications of AI in end products, there is also a growing place for AI in more traditional creative, analytical and development roles. My background as CTO is obviously technical, so I have more of a leaning towards the application of AI in development. Mel, our Chief Experience Officer, is seeing a similar trend in her creative teams.

Using AI whilst developing

With the proliferation of programming languages, not to mention the constant evolution of frameworks, design patterns, APIs etc, it is impossible for one person to know everything. Software developers will have an area they focus on or are more proficient in. However, even then you will hit a bug, an issue, or a requirement that makes you stop and think. Historically you would spend a day contemplating the challenge, or you could run it past a colleague. In more recent years you could post and search questions on StackOverflow. In today’s LLM / AI age, ChatGPT has the answers, or does it?

Just like a colleague or StackOverflow, ChatGPT will inevitably give you some incorrect and sometimes crazy answers. The oracle you’re asking is only as good as its own knowledge. In the past, a developer might trawl through out-of-date, misleading and conflicting information to derive the nuggets of insight needed to solve their dilemma. The answer is very rarely already written for you; you have to take inspiration and then create your own solution.

With services like ChatGPT you can pose technical questions and receive, on the surface, great responses. They not only provide the code, but also an explanation of what the code is doing. However, we need to view the quality of this code through the same sceptical eye we would use for a piece of code found on StackOverflow. It’s like asking a colleague who only thinks they know the answer, but delivers it so confidently that you blindly believe them. In the LLM / AI world this behaviour is called a hallucination: the AI model generates incorrect or misleading information in response to a prompt.
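To make that sceptical eye a bit more concrete, here is a minimal sketch of checking a suggested snippet before trusting it. Everything in it is made up for illustration: `suggested_chunk` stands in for a plausible-looking answer from an assistant, `reviewed_chunk` is the version after review, and `check_chunk` is a hypothetical helper that runs a few known cases against either one.

```python
def suggested_chunk(items, size):
    """Hypothetical snippet as an assistant might return it: split a list into chunks."""
    # Looks reasonable, but the range stops early and drops a trailing partial chunk.
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def reviewed_chunk(items, size):
    """The same idea after review: the range now covers the whole list."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def check_chunk(fn):
    """Run a few known cases and return a list of failures (empty means all passed)."""
    cases = [
        (([1, 2, 3, 4], 2), [[1, 2], [3, 4]]),          # divides evenly
        (([1, 2, 3, 4, 5], 2), [[1, 2], [3, 4], [5]]),  # leftover item
        (([], 3), []),                                  # empty input
    ]
    failures = []
    for args, expected in cases:
        actual = fn(*args)
        if actual != expected:
            failures.append(f"{fn.__name__}{args}: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    for fn in (suggested_chunk, reviewed_chunk):
        problems = check_chunk(fn)
        print(fn.__name__, "passed" if not problems else problems)
```

The point is not the chunking itself. A confident explanation alongside the code tells you nothing about the edge cases, so a handful of quick checks is a cheap way to find out.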

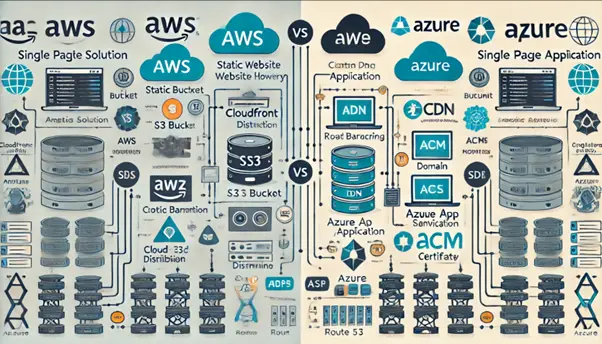

Just recently I was explaining a simple application’s architecture to a colleague. I’m used to the AWS landscape, but my colleague is more familiar with Azure. I asked ChatGPT for the Azure terms for things like S3, CloudFront CDN etc. It was great and I was able to convey the architecture in Azure terms. I then asked if ChatGPT could draw a landscape diagram of the AWS vs Azure solutions. I was expecting a line drawing of a few interconnected nodes; instead I got the image shown above.

Even though I asked the question and know the subject matter, I am still not 100% sure what this picture is showing. I don’t know what AWZ is, or “Crotic Barrantion”, never mind “Azuue App Sanvication”. To me, this is a great example of an AI hallucination.

It goes to show

“AI is only as good as the quality of the source material it was trained on.”

And if ChatGPT was trained on sites like StackOverflow, then there is a lot of noise to fight through to get those nuggets of information or insight. And sometimes, as with the above image, AI just seems to throw all caution to the wind and get creative.

All is not lost

That said, all is not lost. As part of my job, I still get my hands dirty with coding, more recently as part of our ongoing R&D activities. This means I am trying to solve challenges in creative new ways or prove out new concepts and capabilities. As a result, I am regularly stumped by development challenges. I find myself leaning more on ChatGPT than on StackOverflow for my investigations. I am also finding that I spend more time curating the questions that I am going to pose to ChatGPT. Gone are the short, keyword-specific search terms, trying to hit some magic SEO through Google. Now I am putting context into my question, explaining what I’m trying to accomplish, how it should fit into an existing workflow, what I’ve already tried etc.
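As a rough illustration of what that kind of question looks like, here is a small sketch that assembles a prompt from those same pieces of context. The names `PromptContext` and `build_prompt`, the section headings and the example text are all hypothetical; this is one possible way to structure a question, not a recommended template, and it does not call any AI service.

```python
from dataclasses import dataclass, field


@dataclass
class PromptContext:
    """Hypothetical container for the context that goes into a question."""
    goal: str                      # what I'm trying to accomplish
    workflow: str                  # how it should fit into the existing workflow
    question: str                  # the actual question being asked
    already_tried: list[str] = field(default_factory=list)  # what I've already tried


def build_prompt(ctx: PromptContext) -> str:
    """Assemble a plain-text prompt, one labelled section per piece of context."""
    sections = [
        f"What I'm trying to accomplish:\n{ctx.goal}",
        f"How it fits into the existing workflow:\n{ctx.workflow}",
    ]
    # Only include the "already tried" section when there is something to list.
    if ctx.already_tried:
        tried = "\n".join(f"- {item}" for item in ctx.already_tried)
        sections.append(f"What I've already tried:\n{tried}")
    sections.append(f"Question:\n{ctx.question}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    # Made-up example content, purely for illustration.
    ctx = PromptContext(
        goal="Generate thumbnails for uploaded images.",
        workflow="Runs as a step after upload, before the file is published.",
        question="How can I avoid reprocessing files that already have a thumbnail?",
        already_tried=["Checking file names", "Comparing modified timestamps"],
    )
    print(build_prompt(ctx))
```

Compared with a three-word search term, the result is long, but every section answers a question a colleague would otherwise have to ask you before they could help.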

Earlier this year I was talking to a customer at our company conference, and we commented that the complexity and thought that goes into phrasing questions to LLMs like ChatGPT is becoming a science in itself. So maybe my earlier statement, that AI is only as good as the quality of the material it was trained on, is only half the story. The other half is that:

“The answers you get are only as good as the questions you ask”

Indeed, we’re now seeing jobs and training courses advertised for AI Prompt Engineers, whose job it is to tailor the best, most efficient and accurate prompt for an LLM. There are even LLMs that you can use to help you build questions to ask other LLMs.

It’s fascinating to see how AI is evolving, and where it is having an impact. I just hope companies, like the one producing the AI toaster, consider the long-term implications of AI in products. Otherwise, we could end up with neurotic products like “Talkie Toaster”, or automatic doors and elevators with “Genuine People Personalities” à la The Hitchhiker’s Guide to the Galaxy.